Social media platforms are crucial to international communication and data sharing. Although these platforms have been adopted all over the world, language barriers persist and hinder communication. Real-time language translation supported by machine learning algorithms plays an important role here. It allows users to write messages, posts, and comments in their own language, and it converts messages, posts, and comments written in other languages into a language the user can understand.

Recently, by exploiting large amounts of data and complex algorithms, machine learning approaches have shifted natural language processing (NLP) from traditional sequence-modeling methods to neural models. This paper examines these methods in depth, along with their use in real-time translation on social networks.

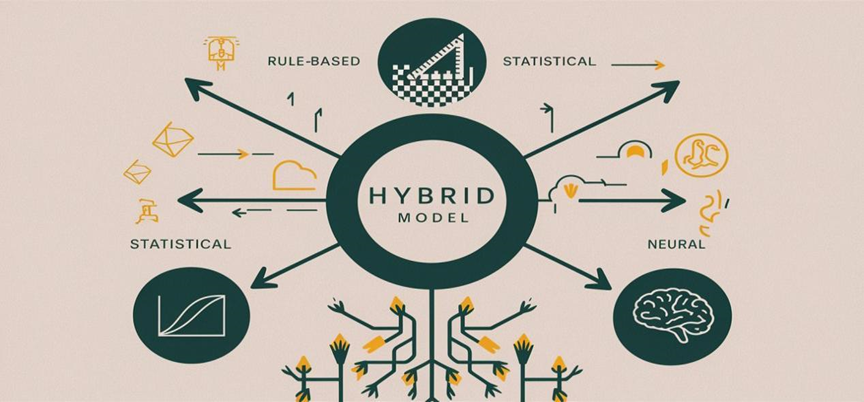

Neural Machine Translation (NMT) is a milestone in the evolution of language translation. NMT models are built on neural networks that can take a whole sentence as input and translate it into another language in one pass, capturing its complex features and dependencies. NMT produces more fluent and more accurate translations than rule-based and statistical methods. It involves the following models:

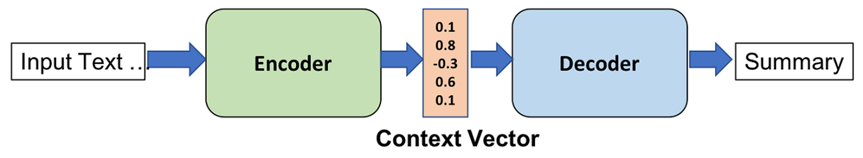

The Sequence to Sequence (Seq2Seq) model is the core building block of Neural Machine Translation. It consists of two parts: an encoder and a decoder. The encoder reads the sentence word by word and produces a fixed-size vector that represents the meaning of the whole sentence. The decoder then uses this vector to generate the output. Seq2Seq models can generate output sentences of any length, and they have shown good performance in capturing both semantic and syntactic relationships.
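The encoder-decoder split described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the class names `ToyEncoder` and `ToyDecoder`, the hidden size, and the tiny vocabularies are hypothetical, and the weights are random and untrained, so the generated words are arbitrary. What the sketch does show is that inputs of different lengths are folded into a vector of the same fixed size, and that the decoder produces tokens one step at a time until it emits an end marker.

```python
import math
import random

HIDDEN_SIZE = 4  # length of the fixed-size context vector (illustrative assumption)


def random_matrix(rows, cols, rng):
    """Return a rows x cols matrix of small random weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyEncoder:
    """Folds a token sequence into one fixed-size vector, one word at a time."""

    def __init__(self, vocab, rng):
        self.embeddings = {
            word: [rng.uniform(-1, 1) for _ in range(HIDDEN_SIZE)] for word in vocab
        }
        self.recurrent = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng)

    def encode(self, tokens):
        state = [0.0] * HIDDEN_SIZE
        for token in tokens:
            carried = matvec(self.recurrent, state)  # what is remembered so far
            state = [math.tanh(e + c) for e, c in zip(self.embeddings[token], carried)]
        return state


class ToyDecoder:
    """Generates output tokens step by step, starting from the context vector."""

    def __init__(self, vocab, rng):
        self.vocab = list(vocab)
        self.recurrent = random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng)
        self.output = random_matrix(len(self.vocab), HIDDEN_SIZE, rng)

    def decode(self, context, max_len=6):
        state, result = context, []
        for _ in range(max_len):
            state = [math.tanh(v) for v in matvec(self.recurrent, state)]
            scores = matvec(self.output, state)
            token = self.vocab[scores.index(max(scores))]  # greedy choice
            if token == "<eos>":  # the decoder decides when to stop
                break
            result.append(token)
        return result


rng = random.Random(0)
encoder = ToyEncoder(["hello", "how", "are", "you"], rng)
decoder = ToyDecoder(["hola", "como", "estas", "<eos>"], rng)

short_context = encoder.encode(["hello"])
long_context = encoder.encode(["hello", "how", "are", "you"])
translation = decoder.decode(long_context)  # untrained, so the words are arbitrary
```

Both `short_context` and `long_context` have `HIDDEN_SIZE` entries, which is exactly the bottleneck that makes long sentences hard for a plain Seq2Seq model.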

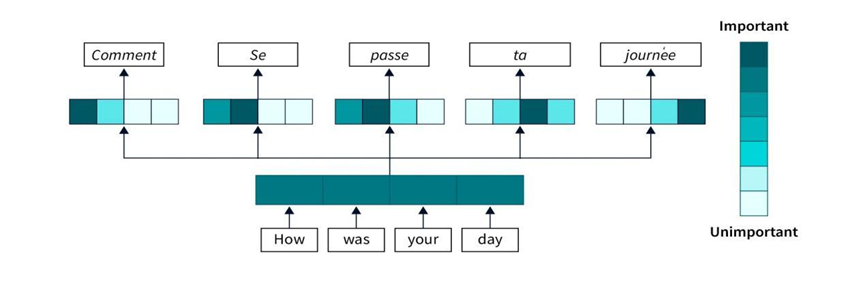

The attention mechanism addresses the difficulty Seq2Seq models have with longer sentences, where a single fixed-size vector must carry the whole meaning. When producing each output word, it places more or less weight on different parts of the input. It looks for the words or parts of the sentence most relevant to the word about to be generated and uses them to determine its meaning. As a result, it works better on long sentences.
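As a rough illustration of this weighting, the sketch below scores each encoder state against the current decoder state with a dot product, turns the scores into weights with a softmax, and builds a context vector as the weighted sum. The function name `attend` and the example vectors are assumptions made for illustration; dot-product scoring is one common choice among several.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state, encoder_states):
    """Return (weights, context) for one decoding step.

    weights[i] says how strongly the decoder focuses on input position i;
    context is the weighted sum of the encoder states.
    """
    scores = [
        sum(d * e for d, e in zip(decoder_state, state)) for state in encoder_states
    ]
    weights = softmax(scores)
    dim = len(decoder_state)
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Three input positions, each represented by a 2-dimensional encoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend([2.0, 0.0], encoder_states)
```

In this example the first input position is most similar to the decoder state, so it receives the largest weight, while the second receives the smallest.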

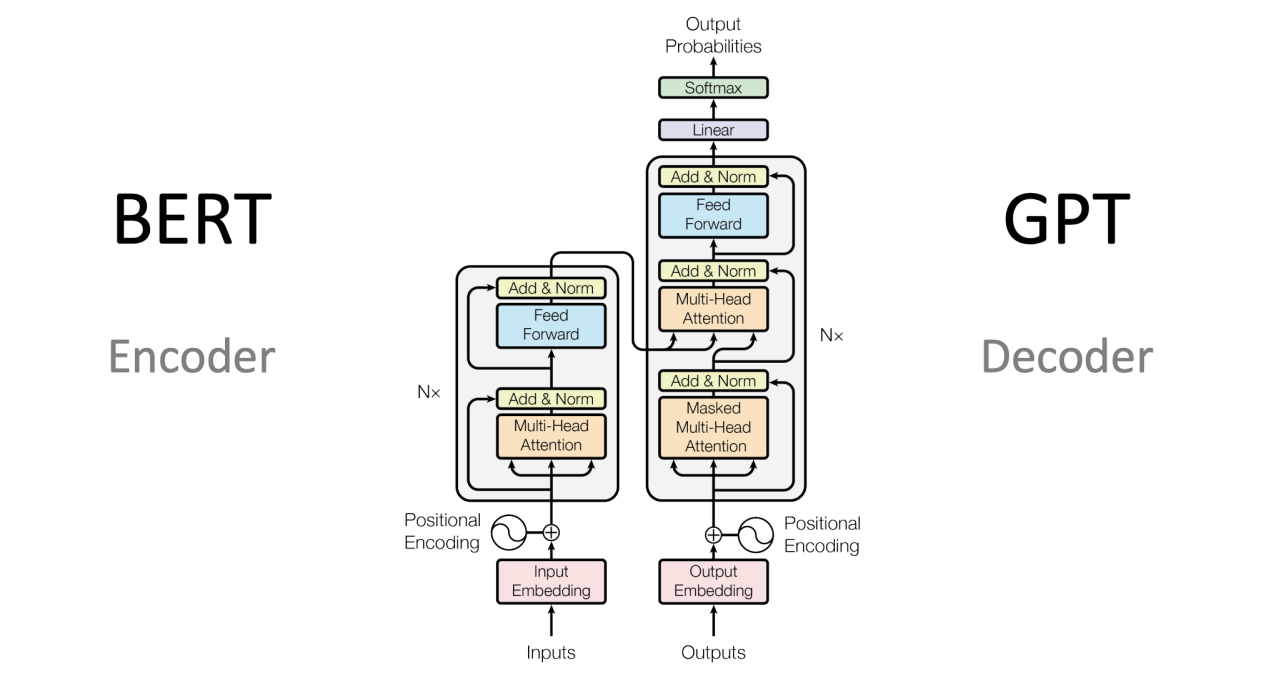

Google introduced the Transformer model, which uses a self-attention mechanism and achieved strong results in machine translation. The Transformer is a neural network that maps an input sequence to an output sequence. It does this by learning which words matter in which context, and it learns these dependencies between words from scratch during training. Transformers use self-attention to decide the importance of one word relative to another in a sentence, which helps them retain fine-grained information about each word.
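A simplified view of self-attention is sketched below: every word in the sentence is compared with every other word, and each word's new representation is a weighted mix of all of them. This is a sketch under a strong simplifying assumption: the word vectors are used directly as queries, keys, and values, whereas a real Transformer applies learned projections and several attention heads. The function name `self_attention` is hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings):
    """Scaled dot-product self-attention without learned projections.

    Returns (outputs, weights): outputs[i] is the new vector for word i and
    weights[i][j] is how much word i attends to word j.
    """
    dim = len(embeddings[0])
    scale = math.sqrt(dim)
    outputs, all_weights = [], []
    for query in embeddings:
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale for key in embeddings
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * value[i] for w, value in zip(weights, embeddings))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Words 0 and 2 share the same vector, so they attend to each other strongly.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, attention = self_attention(sentence)
```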

BERT (Bidirectional Encoder Representations from Transformers) models modify the architecture to process each word in relation to all the other words in the input rather than processing words separately. BERT relies on a mechanism called the masked language model. During pre-training, the model randomly masks some input tokens and then predicts the masked tokens from their relationship with the other words in the input. This objective contributes to the accuracy of the model.
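The masking step can be illustrated with a small helper. This is a simplified sketch, not BERT's full recipe: the function name `mask_tokens` is hypothetical, and every selected token is replaced by `[MASK]`, whereas the original procedure sometimes substitutes a random token or leaves the token unchanged. The 15% default follows the masking rate reported for the original BERT.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Randomly hide tokens and record what the model should predict.

    Returns (masked, labels). labels[i] holds the original token at masked
    positions and None elsewhere, so the loss only covers masked positions.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels


tokens = "the cat sat on the mat".split()
masked, labels = mask_tokens(tokens, mask_prob=0.5, seed=1)
```

Because the model sees the words on both sides of each `[MASK]`, it has to use the full surrounding context to recover the hidden token.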

GPT (Generative Pre-trained Transformer) models use stacked transformer decoders that are pre-trained on large text corpora, from which they pick up linguistic patterns. The model is auto-regressive because it predicts the next value in a sequence based on all previous values in the sequence. A GPT model can continue a text in the same style and tone as the preceding sequence of words.
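The auto-regressive loop itself is simple and can be shown with a toy stand-in for the model. In the sketch below, a bigram counter plays the role of the transformer; this is an assumption made purely to keep the example small, since a bigram model looks only at the last token while GPT conditions on the whole preceding sequence. The function names are hypothetical. The point is the generation loop: predict one token, append it, and feed the longer sequence back in.

```python
from collections import Counter, defaultdict


def train_bigram_model(tokens):
    """Count which token follows which (a toy stand-in for pre-training)."""
    counts = defaultdict(Counter)
    for previous, following in zip(tokens, tokens[1:]):
        counts[previous][following] += 1
    return counts


def predict_next(model, context):
    """Return the most frequent continuation, or None if the context is unseen."""
    if not context or context[-1] not in model:
        return None
    return model[context[-1]].most_common(1)[0][0]


def generate(model, prompt, max_new_tokens):
    """Auto-regressive decoding: each prediction is appended and fed back in."""
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        token = predict_next(model, sequence)
        if token is None:
            break
        sequence.append(token)
    return sequence


corpus = "i like tea . i like tea . i like coffee .".split()
model = train_bigram_model(corpus)
completion = generate(model, ["i"], max_new_tokens=3)
# completion == ["i", "like", "tea", "."]
```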

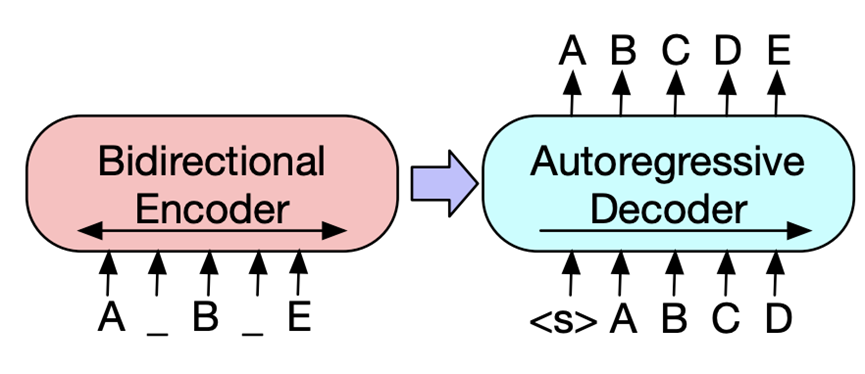

BART (Bidirectional and Auto-Regressive Transformer) is a type of transformer model that merges properties of both BERT and GPT. It combines a bidirectional encoder like BERT's with an auto-regressive decoder like GPT's. The encoder reads the entire input sequence at once, and the decoder then generates the output sequence one token at a time based on the representation provided by the encoder.

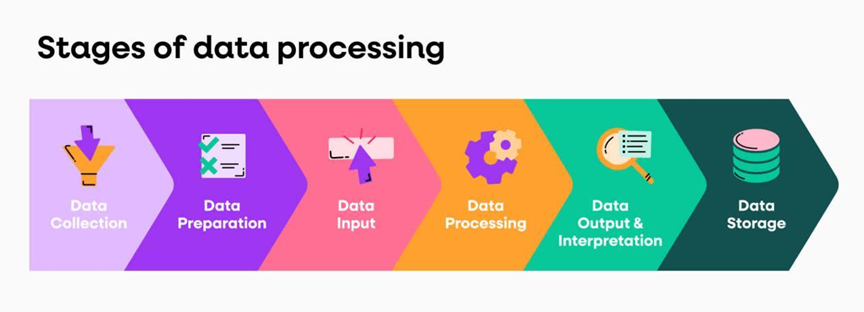

Social media platforms provide large amounts of user-generated text data in multiple languages, which is needed to train relevant, high-quality models. Posts, comments, messages, and other user-generated content are collected in various languages. Preprocessing involves cleaning and normalizing the text, removing noise or unwanted elements, and handling vowel errors. This procedure aims to ensure that the model is trained on consistent data.

Normalization standardizes the data. It converts the whole input to lowercase, checks spelling and abbreviations, and removes emojis and hashtags. Normalization reduces inconsistencies and improves the quality of the training data.
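A minimal sketch of such a normalization step is shown below. The abbreviation table, the function name `normalize`, and the emoji character ranges are illustrative assumptions: a production pipeline would use a much larger abbreviation list, a broader emoji definition, and a dictionary-based spelling check, which is omitted here.

```python
import re

# Hypothetical abbreviation table; a real one would be far larger.
ABBREVIATIONS = {"omg": "oh my god", "luv": "love", "u": "you"}

HASHTAG_PATTERN = re.compile(r"#\w+")
# Covers common emoji and symbol blocks only; not an exhaustive definition.
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF\uFE0F]")


def normalize(text, abbreviations=ABBREVIATIONS):
    """Lowercase the text, drop hashtags and emojis, and expand abbreviations."""
    text = text.lower()
    text = HASHTAG_PATTERN.sub(" ", text)
    text = EMOJI_PATTERN.sub(" ", text)
    # Whitespace tokenization: abbreviations attached to punctuation are not expanded.
    words = [abbreviations.get(word, word) for word in text.split()]
    return " ".join(words)  # also collapses repeated whitespace


cleaned = normalize("OMG I luv this \U0001F60D #Blessed")
# cleaned == "oh my god i love this"
```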

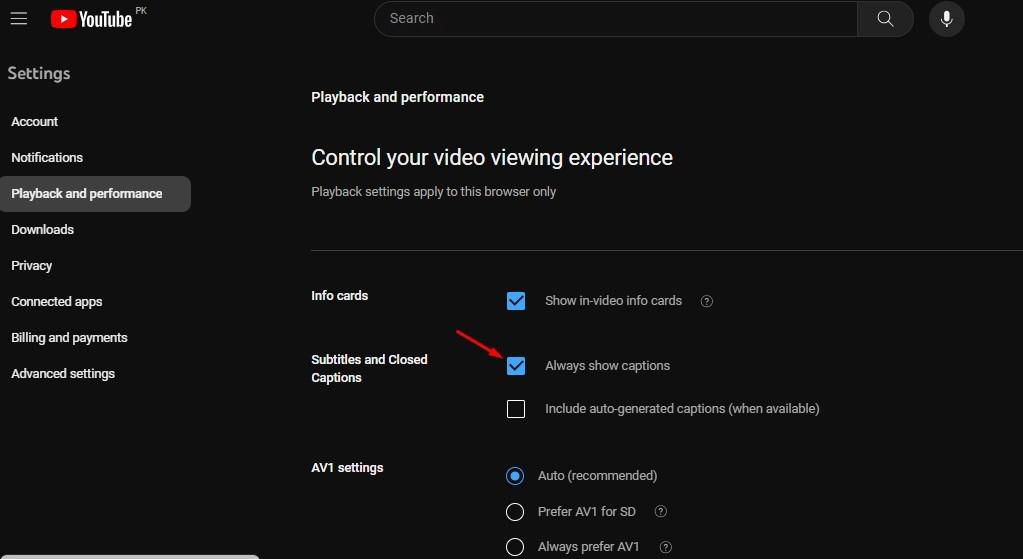

Once the models are trained, they are exposed as APIs that social media platforms call when they need real-time translations. These APIs automatically translate posts, comments, or messages. This generally involves sending text to a translation service, receiving the translated output, and displaying it to users live. The integration enables users to engage with content in their language of choice without delays.
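The request and response flow described above might look roughly like the following sketch. All names here (`TranslationRequest`, `TranslationClient`, `stub_backend`) are hypothetical, and the backend is a local stub rather than a real translation service so that the example runs without a network. The in-memory cache is an added design assumption, included because repeated requests for the same popular post are a natural place to save latency.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class TranslationRequest:
    text: str
    source_lang: str
    target_lang: str


class TranslationClient:
    """Sends text to a translation backend and caches the results."""

    def __init__(self, backend: Callable[[TranslationRequest], str]):
        self._backend = backend
        self._cache: Dict[TranslationRequest, str] = {}

    def translate(self, request: TranslationRequest) -> str:
        # Identical requests are served from the cache to keep latency low.
        if request not in self._cache:
            self._cache[request] = self._backend(request)
        return self._cache[request]


backend_calls = []


def stub_backend(request: TranslationRequest) -> str:
    """Stand-in for a deployed model; falls back to the untranslated text."""
    backend_calls.append(request)
    phrasebook = {("hola", "es", "en"): "hello"}
    key = (request.text.lower(), request.source_lang, request.target_lang)
    return phrasebook.get(key, request.text)


client = TranslationClient(stub_backend)
request = TranslationRequest("Hola", "es", "en")
first = client.translate(request)   # goes to the backend
second = client.translate(request)  # served from the cache
```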

The user interface is an important element in delivering translations to end users. To make translations easy to use, most social media platforms have introduced inline translations, pop-ups, and language settings. With inline translation, the translated content is displayed directly in the user feed, whereas a pop-up provides a translation only when requested. Language settings allow users to control what is translated and how.
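The difference between the inline and pop-up modes can be expressed as a small decision function. The sketch below is hypothetical: the `TranslationSettings` fields, the `render_post` function, and the returned dictionary shape are assumptions chosen for illustration, and `translate` stands for whatever translation call the platform uses.

```python
from dataclasses import dataclass, field
from typing import Callable, Set


@dataclass
class TranslationSettings:
    preferred_lang: str = "en"
    mode: str = "inline"  # "inline" or "popup"
    never_translate: Set[str] = field(default_factory=set)


def render_post(text: str, post_lang: str, settings: TranslationSettings,
                translate: Callable[[str, str, str], str]) -> dict:
    """Decide what the feed shows for one post."""
    if post_lang == settings.preferred_lang or post_lang in settings.never_translate:
        # Nothing to translate, or the user opted out for this language.
        return {"body": text, "offer_translation": False}
    if settings.mode == "inline":
        # Inline mode: translate immediately and show the result in the feed.
        translated = translate(text, post_lang, settings.preferred_lang)
        return {"body": translated, "offer_translation": False}
    # Pop-up mode: show the original and translate only when the user asks.
    return {"body": text, "offer_translation": True}


def fake_translate(text: str, source: str, target: str) -> str:
    """Placeholder translator that just tags the text with the target language."""
    return f"[{target}] {text}"


inline_view = render_post("Hola", "es", TranslationSettings(), fake_translate)
popup_view = render_post("Hola", "es", TranslationSettings(mode="popup"), fake_translate)
```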

Active Learning: This functionality collects feedback on translations directly from users through built-in review mechanisms. The feedback helps the translation models improve through active learning. Corrections and suggestions collected from users can be used to retrain the models, which improves their performance. Human feedback can also improve translation quality by helping the models learn new patterns and adjust their future predictions.
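One way such a feedback loop could be organized is sketched below. The `Feedback` record, the 1 to 5 rating scale, and the selection threshold are assumptions made for illustration. The idea is to keep only the poorly rated translations for which a user supplied a correction, and to turn them into new source and target pairs for retraining.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Feedback:
    source_text: str
    machine_translation: str
    rating: int  # assumed scale: 1 (poor) to 5 (excellent)
    user_correction: Optional[str] = None


def select_retraining_pairs(feedback_log: List[Feedback],
                            max_rating: int = 2) -> List[Tuple[str, str]]:
    """Return (source, corrected translation) pairs worth retraining on."""
    pairs = []
    for item in feedback_log:
        # Keep only low-rated translations that come with a user correction.
        if item.rating <= max_rating and item.user_correction:
            pairs.append((item.source_text, item.user_correction))
    return pairs


feedback_log = [
    Feedback("buenos dias", "good days", rating=2, user_correction="good morning"),
    Feedback("gracias", "thank you", rating=5),
    Feedback("hasta luego", "until then", rating=1),  # no correction supplied
]
retraining_pairs = select_retraining_pairs(feedback_log)
# retraining_pairs == [("buenos dias", "good morning")]
```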

Challenges: Ensuring low latency and high accuracy to enhance the user experience.

Advancements: Work on optimizing the models and improving their efficiency is ongoing.

Real-time language translation using machine learning techniques helps people on social media platforms communicate across linguistic barriers. Integrating NMT, Seq2Seq models, attention mechanisms, and transformers has improved both translation quality and the user experience. Some challenges remain, such as handling informal language, supporting multiple languages, and maintaining real-time performance. Future research and development will continue to address these challenges and improve the capabilities of real-time translation systems.
