Code generation with AI is transforming how developers write software. From a short natural-language prompt, an AI model can produce code snippets in many programming languages. In this blog, we will build our own code generation agent in Python, using the “Salesforce/codegen-350M-mono” model through the Hugging Face transformers library. The agent is beginner-friendly and offers a hands-on introduction to AI-assisted coding, whether you are a beginner or a hobbyist.

To create this agent, we use Salesforce’s codegen-350M-mono model. You write prompts as plain English instructions, and the model generates code from them. The agent uses the Hugging Face transformers library for easy integration. It also lets you control how creative the output is through the temperature setting. It sets max_new_tokens to limit the length of the output and passes pad_token_id to avoid warnings during generation. A simple command-line interface is used to enter prompts.
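Control over creativity comes from the temperature parameter. Before sampling, the scores (logits) the model assigns to each candidate next token are divided by the temperature and turned into probabilities. The small sketch below uses made-up scores, not real CodeGen outputs, to illustrate the general idea. Lower temperatures concentrate probability on the most likely token. Higher temperatures spread it out, giving more varied output.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    largest = max(scaled)
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens (illustrative only).
logits = [2.0, 1.0, 0.1]
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
```

At 0.2, almost all of the probability goes to the first token. At 1.5, the three options become much closer. The generate_code function later in this post defaults to 0.7.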

The following are the pros and cons of this agent:

| Pros | Cons |
| --- | --- |
| Lightweight model, fast and easy to load | The 350M model is small, so output quality varies |
| Straightforward Python implementation | Generated code may require manual clean-up |
| Real-time prompt-response loop | Limited control over code structure |
| Hugging Face simplifies model integration | Some prompts return irrelevant output |

First, install the required libraries:

```shell
pip install transformers accelerate torch
```

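The transformers library by Hugging Face, including its AutoTokenizer and CodeGenForCausalLM classes, simplifies working with pre-trained models. The torch library (PyTorch) is needed because transformers uses it as the backend that actually runs the model. accelerate is a Hugging Face helper library for running models efficiently on the available hardware. The code in this post does not call it directly.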
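Before loading a model, you can confirm that the three packages from the pip command are present. The helper below, missing_packages, is a hypothetical convenience and not part of the original agent. It uses the standard library's importlib.util.find_spec, which looks a package up without importing it, so the check stays fast even for torch.

```python
import importlib.util

# Packages installed by the pip command above.
REQUIRED = ["transformers", "accelerate", "torch"]

def missing_packages(names=REQUIRED):
    """Return the names of packages that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
        print("Install them with: pip install " + " ".join(missing))
    else:
        print("All required packages are installed.")
```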

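```python
from transformers import AutoTokenizer, CodeGenForCausalLM

model_name = "Salesforce/codegen-350M-mono"

# Load the tokenizer and reuse the end-of-sequence token for padding
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
tokenizer.pad_token = tokenizer.eos_token

# Load the CodeGen model weights
model = CodeGenForCausalLM.from_pretrained(model_name, trust_remote_code=True)
```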

These lines load a pretrained code generation model called "Salesforce/codegen-350M-mono" from Hugging Face. First, the tokenizer is loaded. It converts text into token IDs and back again. The tokenizer has no padding token of its own, so we set pad_token to the end-of-sequence token (eos_token) to avoid errors when tokenizing with padding. Finally, the CodeGenForCausalLM model itself is loaded to generate code from text prompts.
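Padding only matters when several prompts of different lengths are tokenized together. With the single prompt this agent sends, nothing is actually padded. The pure-Python sketch below is not the tokenizer's real implementation, and its token IDs are made up. It shows what padding does: shorter sequences are filled with the pad ID, and the attention mask marks those positions with 0 so the model ignores them. Left padding is the default here because Hugging Face recommends it for batched generation with decoder-only models such as CodeGen, which continue from the right end of the sequence. The value 50256 is an illustrative assumption for the EOS ID. In practice, read tokenizer.eos_token_id.

```python
def pad_batch(sequences, pad_id, side="left"):
    """Pad token-ID lists to equal length and build matching attention masks.

    Returns (input_ids, attention_mask); the mask is 1 for real tokens and
    0 for padding positions.
    """
    width = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        pad = [pad_id] * (width - len(seq))
        ones, zeros = [1] * len(seq), [0] * len(pad)
        if side == "left":
            input_ids.append(pad + seq)
            attention_mask.append(zeros + ones)
        else:
            input_ids.append(seq + pad)
            attention_mask.append(ones + zeros)
    return input_ids, attention_mask

EOS_ID = 50256  # assumed EOS id, reused as the pad id (illustrative)
ids, mask = pad_batch([[101, 102, 103], [104]], pad_id=EOS_ID)
print(ids)
print(mask)
```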

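```python
def generate_code(prompt, max_tokens=512, temperature=0.7):
    # Tokenize the prompt into PyTorch tensors (input_ids and attention_mask)
    inputs = tokenizer(prompt, return_tensors="pt", padding=True)

    # Sample up to max_tokens new tokens; temperature controls randomness
    outputs = model.generate(
        **inputs,
        max_new_tokens=max_tokens,
        do_sample=True,
        temperature=temperature,
        pad_token_id=tokenizer.eos_token_id,
    )

    # Keep only the newly generated tokens, dropping the echoed prompt
    prompt_length = inputs["input_ids"].shape[1]
    return tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True).strip()
```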

The generate_code function handles code generation from user prompts. It first tokenizes the prompt string into a model-readable format. Padding is enabled so the input is correctly shaped, which works because of the earlier padding fix. The result is a set of PyTorch tensors (input_ids and attention_mask), which are passed to model.generate(). With do_sample=True, the model samples up to max_tokens new tokens at the given temperature. Finally, the output token IDs are decoded back into text, and the prompt is removed so that only the generated code is returned.
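To make the generation step more concrete, here is a toy illustration of sampling-based generation. Like model.generate(do_sample=True), it appends one sampled token at a time, stops at an end-of-sequence token or after max_new_tokens, and returns the prompt plus the new tokens. The "model" is a hypothetical hand-written bigram table that only looks at the previous token. A real model like CodeGen conditions on the whole sequence. The tokens and scores are invented for illustration.

```python
import math
import random

# Hypothetical toy "model": next-token scores given only the previous token.
TOY_LOGITS = {
    "def": {"f": 2.0, "(": 0.5, "<eos>": 0.1},
    "f": {"(": 2.5, "<eos>": 0.2},
    "(": {"x": 2.0, ")": 1.0},
    "x": {")": 2.5, "<eos>": 0.3},
    ")": {":": 2.5, "<eos>": 0.5},
    ":": {"<eos>": 2.0, "x": 0.5},
}

def sample_next(prev, temperature, rng):
    """Sample one next token from temperature-scaled scores."""
    scores = TOY_LOGITS.get(prev, {"<eos>": 1.0})
    tokens = list(scores)
    weights = [math.exp(scores[t] / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def toy_generate(prompt_tokens, max_new_tokens=8, temperature=0.7, seed=0):
    """Append sampled tokens one at a time until <eos> or the token limit."""
    rng = random.Random(seed)
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        nxt = sample_next(tokens[-1], temperature, rng)
        if nxt == "<eos>":  # stop early at end-of-sequence
            break
        tokens.append(nxt)
    return tokens

prompt = ["def"]
output = toy_generate(prompt, max_new_tokens=8, temperature=0.7, seed=0)
print("full sequence:", output)
print("new tokens only:", output[len(prompt):])  # drop the prompt, as generate_code does
```

The last line mirrors why generate_code removes the prompt. The returned sequence starts with the prompt tokens, so only the tail is new code.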

```python
print("Welcome to the Code Generative AI")

while True:
    user_prompt = input("\nEnter your prompt or type exit to quit: ")

    # Stop the loop when the user types "exit" (case-insensitive)
    if user_prompt.lower() == "exit":
        print("GoodBye!")
        break

    print("\nGenerated Code:\n", generate_code(user_prompt))
```

In the main loop, the agent first greets the user and then asks for a prompt. If the user types exit, the loop stops. Otherwise, the prompt is passed to generate_code() and the generated code is printed.
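If you want to test the loop without downloading the model, one option is to wrap it in a function that receives the generator and the input/output functions as parameters. The sketch below is a hypothetical refactor, not the original code. run_cli and its parameters are illustrative names. It also skips empty prompts and treats Ctrl-D or Ctrl-C as "exit".

```python
def run_cli(generate, read=input, write=print):
    """Prompt loop: read a request, pass it to generate(), write the result.

    `generate` is any function mapping a prompt string to code text, such as
    generate_code from this post; read/write default to the console.
    """
    write("Welcome to the Code Generative AI")
    while True:
        try:
            prompt = read("\nEnter your prompt or type exit to quit: ").strip()
        except (EOFError, KeyboardInterrupt):
            prompt = "exit"  # treat Ctrl-D / Ctrl-C as a request to quit
        if prompt.lower() == "exit":
            write("GoodBye!")
            break
        if not prompt:
            continue  # ignore empty input instead of calling the model
        write("\nGenerated Code:\n" + generate(prompt))
```

With the model loaded, `run_cli(generate_code)` behaves like the loop above. In tests, you can pass a stand-in such as `lambda p: "# code for " + p`.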

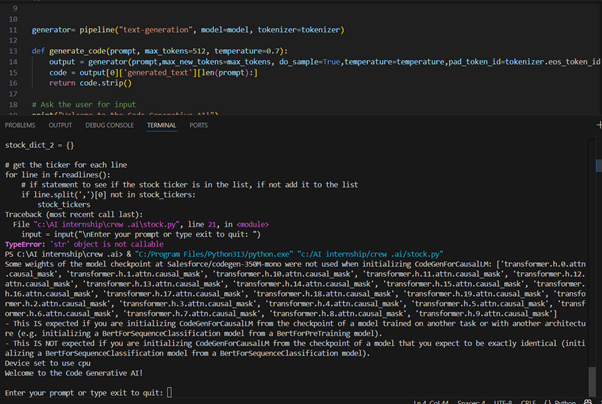

After installing the libraries and running the script, the command-line interface looks as shown below:

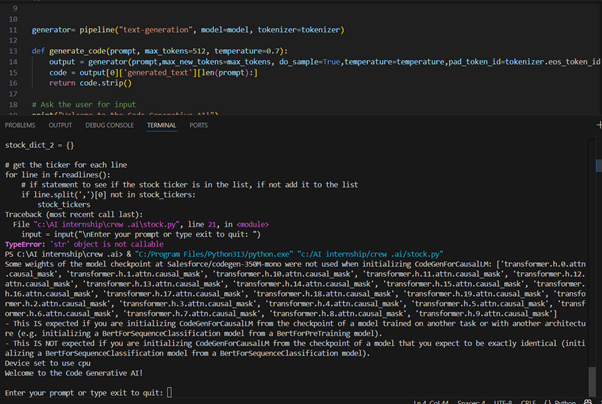

As you can see, the agent asks the user to enter a prompt or type exit to quit. Here, the user enters a prompt asking for code that checks whether a given input is even or odd. You can use the agent the same way to generate many other kinds of code.

Once the user enters the prompt and presses Enter, the agent automatically generates code for it. In this example, it creates a function called evenodd() followed by a main function.
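Because generation stops once max_new_tokens is reached, the last lines of the output can be cut off mid-statement. A possible clean-up step, not part of the original agent, is to check the result with Python's standard ast module and drop trailing lines until the rest parses. The helper name trim_to_valid_python and the sample text below are hypothetical. The sample is a made-up truncated output, not what the agent actually produced. This check only confirms the code is syntactically valid, not that it is correct, so the output still needs review.

```python
import ast

def trim_to_valid_python(code):
    """Drop trailing lines until the remaining text parses as Python.

    Returns "" if no leading portion of the code parses. Only syntax is
    checked; the code is never executed.
    """
    lines = code.splitlines()
    while lines:
        candidate = "\n".join(lines)
        try:
            ast.parse(candidate)
            return candidate
        except SyntaxError:  # includes IndentationError
            lines.pop()
    return ""

# Made-up output that was cut off in the middle of the last line.
sample = "def is_even(n):\n    return n % 2 == 0\n\nprint(is_even("
print(trim_to_valid_python(sample))
```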

In conclusion, this agent offers a simple and interactive way to generate Python code using natural language prompts. Powered by a pretrained AI model, it’s ideal for learning, quick prototyping, and exploring AI-assisted coding. While it handles basic tasks well, users should review the output, as it may need refinement. Overall, it's a practical and accessible example of code generation with AI.

