When I set out to build BizIntel—a Telegram bot aimed at delivering intelligent business insights—I had one clear goal: to create a system that could reliably understand user queries and provide valuable, context-sensitive answers. However, the road to success was paved with many experiments, failures, and ultimately, breakthroughs. Here’s how the evolution of my approach—from using a GPT‑2 model to fine‑tuning prompt strategies with Llama‑7B—shaped BizIntel into an intelligent bot.

At first, I integrated GPT‑2 as the language model behind BizIntel. GPT‑2 was a logical starting point because it was easy to access and well covered by tutorials at the time. Despite my high hopes, the model frequently returned responses that were off‑topic and sometimes lacked the precision needed for business intelligence tasks. The outputs were inconsistent and not suitable for a professional tool. This experience taught me that even a well‑known model like GPT‑2 might not meet the standards required for an enterprise‑grade application.

Realizing that GPT‑2 was falling short, I moved on to experiment with Llama‑7B. With its larger parameter count and more modern architecture, I anticipated improved performance. Llama‑7B did deliver responses with more substance, but the answers were still not entirely convincing. They often missed key details or felt generic in scenarios that required a deeper understanding of complex business queries. The challenge was clear: I needed the model to “get it right” on the first try, because there is no room for ambiguous or incomplete answers when users depend on the bot for accurate insights.

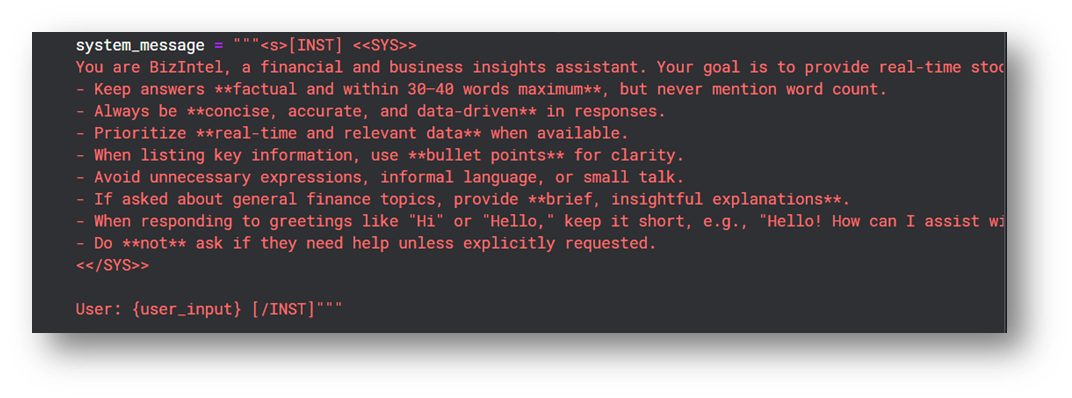

After several rounds of tweaking, I discovered that the secret wasn’t solely in the model choice—it was in how I communicated with the model. I began to explore prompt engineering techniques, specifically the use of a well‑defined system message. By incorporating a dedicated system message into my prompts, I set the context clearly before passing on the user query.

This extra layer of instruction helped the model align its responses with the intended tone, depth, and specificity.


For example, my earlier prompt looked something like this: “User: What are the current trends in the market?” After some iterations, I restructured the prompt to open with a system message: “Instructions: You are BizIntel, a business intelligence bot that provides insightful, data‑driven responses. Always base your answers on recent market trends and support your conclusions with a clear rationale.” The user query then follows that message.

This simple yet powerful adjustment made a huge difference. The responses became more focused, the reasoning clearer, and the overall output much more convincing. This experience confirmed that prompt engineering is not just a nice‑to‑have—it’s essential for tailoring the behavior of large language models in real‑world applications.
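The restructured prompt described above can be sketched in a few lines of Python. This is a minimal illustration, not BizIntel's actual code: the function name `build_prompt`, the blank-line separators, and the trailing `Assistant:` cue are assumptions of mine, while the `Instructions:` and `User:` labels and the system message text come from the example above.

```python
# System message text taken from the example prompt in this post.
SYSTEM_MESSAGE = (
    "You are BizIntel, a business intelligence bot that provides insightful, "
    "data-driven responses. Always base your answers on recent market trends "
    "and support your conclusions with a clear rationale."
)


def build_prompt(user_query: str, system_message: str = SYSTEM_MESSAGE) -> str:
    """Place the system message ahead of the user query in one plain-text prompt.

    Hypothetical helper: the layout (blank lines, "Assistant:" cue) is an
    assumption, not a format required by any particular model.
    """
    query = user_query.strip()
    if not query:
        raise ValueError("user_query must not be empty")
    return f"Instructions: {system_message}\n\nUser: {query}\n\nAssistant:"


prompt = build_prompt("What are the current trends in the market?")
print(prompt)
```

Keeping the system message in one constant means every query is framed the same way, and the wording can be iterated on without touching the rest of the bot.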

Throughout this journey, several difficulties emerged:

Each challenge, however, served as a learning opportunity. It reinforced the importance of not only selecting the right model but also crafting the right “conversation” with it through precise prompts.


The journey to refine BizIntel was as much about understanding language models as it was about learning to communicate effectively with them. Here are some key takeaways:

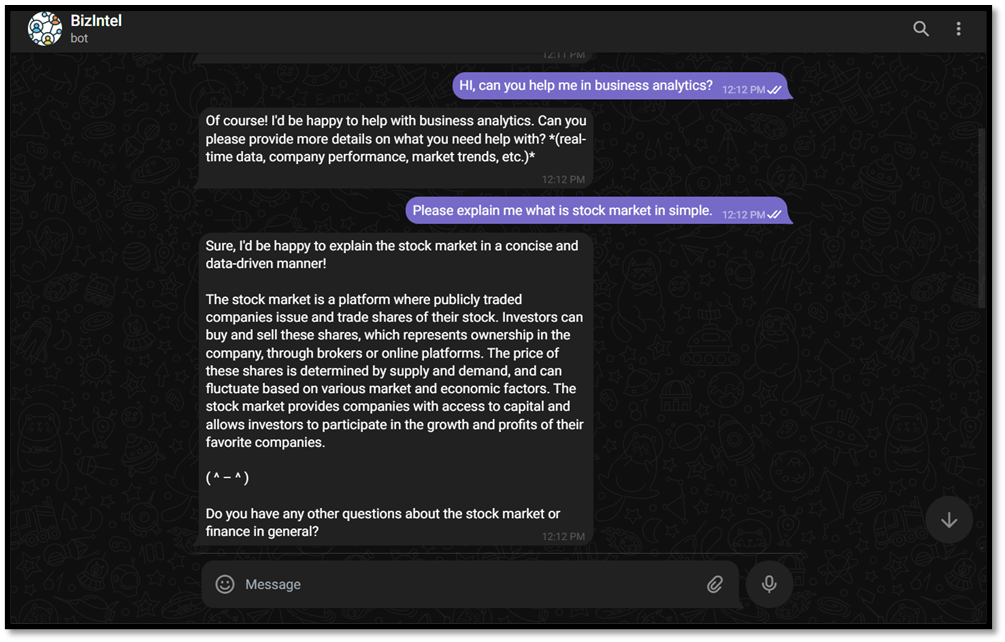

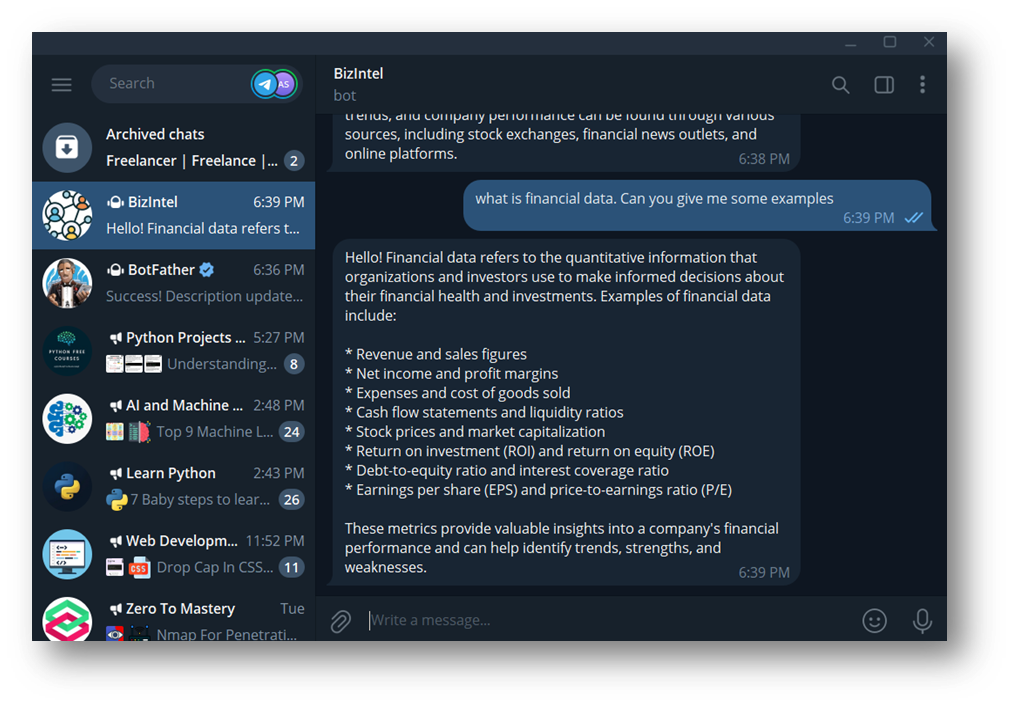

Today, BizIntel is performing reliably as a helpful assistant. The bot has reached a point where it delivers context-aware responses that truly meet users’ business needs, without any overstatement or unnecessary fluff.

But the journey doesn’t stop here. Soon, I’ll integrate Retrieval Augmented Generation (RAG) into BizIntel, an upgrade that will supercharge its capabilities. By combining its existing language model with dynamic external data sources, BizIntel will evolve from a reactive assistant to a proactive partner.
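To make the idea concrete, here is a rough Python sketch of the retrieve-then-prompt pattern behind RAG. It is a toy under stated assumptions, not the planned BizIntel integration: the helper names (`tokenize`, `retrieve`, `build_rag_prompt`), the word-overlap scoring, and the placeholder documents are all hypothetical, and a real setup would more likely use embeddings and a vector store.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no shared words, then rank by overlap (highest first).
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the user query as context."""
    context = retrieve(query, documents, k)
    context_block = "\n".join(f"- {doc}" for doc in context) or "- (no matching context found)"
    return f"Context:\n{context_block}\n\nUser: {query}"


# Placeholder documents for illustration only; they contain no real data.
docs = [
    "Placeholder note about retail sales trends.",
    "Placeholder note about hiring plans.",
    "Placeholder note about office furniture.",
]
rag_prompt = build_rag_prompt("What are the retail sales trends?", docs)
print(rag_prompt)
```

The point of the pattern is that the model answers from text fetched at query time rather than only from what it learned during training.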
