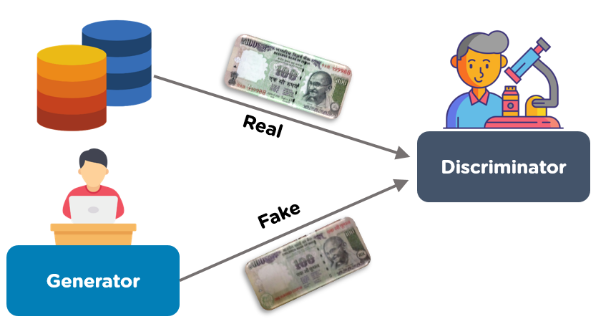

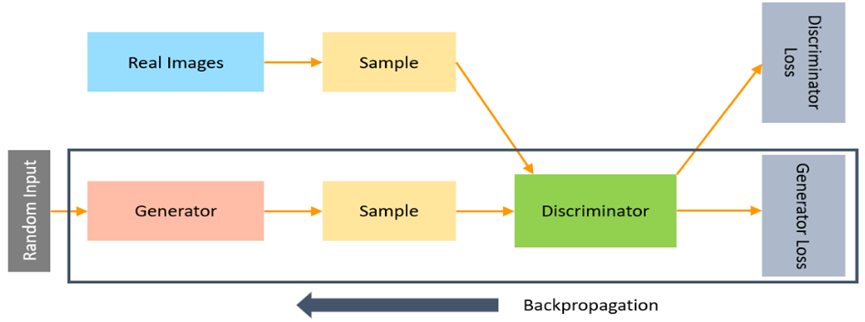

A Generative Adversarial Network (GAN) is a powerful class of neural network model in which two networks are trained to compete with each other, so that one of them learns to generate new data resembling the available training data. It is called adversarial because training is a contest between the two networks. The first is the generator, which takes random noise as input and turns it into synthetic data; for images it is typically built from transposed-convolution (sometimes called deconvolutional) layers that upsample the noise. The second is the discriminator, typically a convolutional classifier for images, which checks whether a sample looks like it came from the dataset or from the generator. In other words, the discriminator labels each sample as real or fake. When the discriminator flags generated data as fake, the resulting error is backpropagated to the generator, which adjusts its weights so that its next outputs are more convincing. In this way the quality of the generated data improves gradually.

The term Generative Adversarial Network can be split into three parts:

Generative: It refers to the generation of data, meaning that the model learns to produce new samples.

Adversarial: It indicates competition. The term is used because two neural networks (the generator and the discriminator) compete with each other.

Network: It indicates that both the generator and the discriminator are neural networks.

Vanilla GAN is the simplest type of Generative Adversarial Network. Its generator and discriminator are plain multi-layer neural networks (multi-layer perceptrons). Its algorithm optimizes the GAN objective using stochastic gradient descent, which updates the weights from one mini-batch of data at a time. The generator produces data, while the discriminator simply decides whether a sample belongs to the dataset or not.

Deep Convolutional GAN (DCGAN) is the type of GAN that uses convolutional neural networks instead of multi-layer perceptrons. It replaces max pooling with strided convolutions, and it does not use fully connected layers. The generator uses ReLU activations, while the discriminator uses LeakyReLU.
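As a rough illustration of these design choices, the sketch below builds a small DCGAN-style generator and discriminator for 28×28 grayscale images. The class names `DCGenerator` and `DCDiscriminator`, the layer widths, the kernel sizes, and the use of batch normalization are assumptions chosen for this example, not details given in the text above.

```python
import torch
import torch.nn as nn


class DCGenerator(nn.Module):
    """Maps a noise vector to a 1x28x28 image with transposed convolutions."""

    def __init__(self, z_dim: int = 64):
        super().__init__()
        self.net = nn.Sequential(
            # (z_dim, 1, 1) -> (128, 7, 7)
            nn.ConvTranspose2d(z_dim, 128, kernel_size=7, stride=1, padding=0),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            # (128, 7, 7) -> (64, 14, 14)
            nn.ConvTranspose2d(128, 64, kernel_size=4, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            # (64, 14, 14) -> (1, 28, 28), Tanh keeps pixels in [-1, 1]
            nn.ConvTranspose2d(64, 1, kernel_size=4, stride=2, padding=1),
            nn.Tanh(),
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        # Treat each noise vector as a z_dim-channel 1x1 "image"
        return self.net(z.view(z.size(0), -1, 1, 1))


class DCDiscriminator(nn.Module):
    """Downsamples with strided convolutions instead of pooling."""

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            # (1, 28, 28) -> (64, 14, 14)
            nn.Conv2d(1, 64, kernel_size=4, stride=2, padding=1),
            nn.LeakyReLU(0.2, inplace=True),
            # (64, 14, 14) -> (128, 7, 7)
            nn.Conv2d(64, 128, kernel_size=4, stride=2, padding=1),
            nn.BatchNorm2d(128),
            nn.LeakyReLU(0.2, inplace=True),
            # (128, 7, 7) -> (1, 1, 1): a final convolution, not a dense layer
            nn.Conv2d(128, 1, kernel_size=7, stride=1, padding=0),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x).view(-1)
```

Calling `DCDiscriminator()(DCGenerator()(torch.randn(8, 64)))` would yield eight probabilities, one per generated image.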

Conditional GAN (CGAN) is the type of GAN that adds a condition ‘y’, such as a class label, to the usual setup. The condition is supplied to both the generator and the discriminator, and the results are evaluated with respect to it. The generator produces data according to the condition, and the discriminator receives a copy of the same condition so that it can check whether the data is realistic and also matches the condition.
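One common way to supply the condition is to concatenate an embedding of the label with the network's input. The sketch below assumes flattened 28×28 images and ten classes; the class names, layer sizes, and the embedding approach are illustrative assumptions rather than the only way to build a conditional GAN.

```python
import torch
import torch.nn as nn


class ConditionalGenerator(nn.Module):
    """Generator that receives noise z together with a class label y."""

    def __init__(self, z_dim: int = 64, num_classes: int = 10, img_dim: int = 784):
        super().__init__()
        self.label_emb = nn.Embedding(num_classes, num_classes)
        self.net = nn.Sequential(
            nn.Linear(z_dim + num_classes, 256),
            nn.ReLU(),
            nn.Linear(256, img_dim),
            nn.Tanh(),
        )

    def forward(self, z: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
        # Concatenate the noise with an embedding of the condition y
        return self.net(torch.cat([z, self.label_emb(y)], dim=1))


class ConditionalDiscriminator(nn.Module):
    """Discriminator that sees the sample and the same condition y."""

    def __init__(self, num_classes: int = 10, img_dim: int = 784):
        super().__init__()
        self.label_emb = nn.Embedding(num_classes, num_classes)
        self.net = nn.Sequential(
            nn.Linear(img_dim + num_classes, 256),
            nn.LeakyReLU(0.2),
            nn.Linear(256, 1),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
        # The score depends on both the image and the label it should match
        return self.net(torch.cat([x, self.label_emb(y)], dim=1)).view(-1)
```

With this layout, asking the generator for a particular digit amounts to passing that digit's index as `y`.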

Laplacian Pyramid GAN (LAPGAN) is the type of GAN that uses multiple levels of a Laplacian pyramid to produce high-quality images with clear textures and structures. A Laplacian pyramid is a method that decomposes an image into a series of detail images at successively smaller scales, plus a small low-resolution image. A LAPGAN generates an image from coarse to fine: it starts at the lowest resolution and, at each level of the pyramid, upscales the current image and uses a GAN to add the missing detail.
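The pyramid itself can be sketched in a few lines. The example below is a simplified version that downsamples with average pooling instead of a Gaussian filter; the function names `laplacian_pyramid` and `reconstruct` are made up for this illustration. In a LAPGAN, a generator at each level would be trained to produce the residual image that this decomposition extracts.

```python
import torch
import torch.nn.functional as F


def laplacian_pyramid(img: torch.Tensor, levels: int = 3) -> list:
    """Split a batch (N, C, H, W) into detail residuals plus a coarse base image."""
    pyramid = []
    current = img
    for _ in range(levels):
        down = F.avg_pool2d(current, kernel_size=2)
        up = F.interpolate(down, size=current.shape[-2:], mode="bilinear",
                           align_corners=False)
        pyramid.append(current - up)  # detail lost by downsampling at this scale
        current = down
    pyramid.append(current)  # coarsest, low-resolution image
    return pyramid


def reconstruct(pyramid: list) -> torch.Tensor:
    """Rebuild the image coarse-to-fine: upscale, then add back the residual."""
    current = pyramid[-1]
    for residual in reversed(pyramid[:-1]):
        current = F.interpolate(current, size=residual.shape[-2:], mode="bilinear",
                                align_corners=False) + residual
    return current


img = torch.rand(2, 1, 32, 32)
pyr = laplacian_pyramid(img, levels=3)
restored = reconstruct(pyr)
```

Because each residual stores exactly what upscaling cannot recover, `restored` matches `img` up to floating-point error.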

Super Resolution GAN (SRGAN) uses deep convolutional networks inside a GAN to produce high-quality images by enhancing the resolution of a low-resolution input. It is used for upscaling images while keeping them sharp and detailed.

A Generative Adversarial Network consists of two important components:

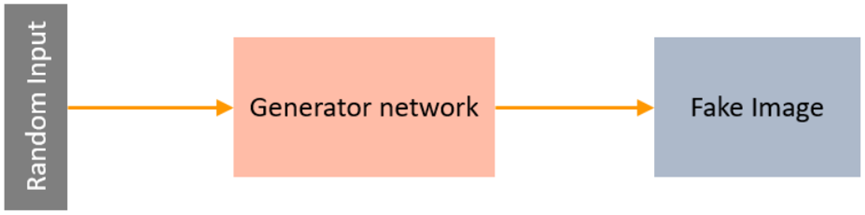

Generator

It is the part of the GAN that is responsible for generating data from the noise vector provided to it.

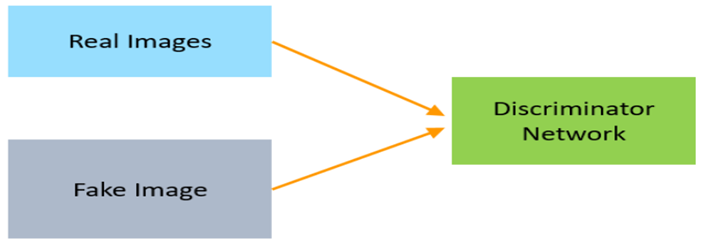

Discriminator

It is the part of the GAN that takes real data and generated data as input and outputs the probability that a given sample is real rather than produced by the generator.

Model Training

During training, the generator learns about the data through the discriminator's feedback and adjusts itself to generate more realistic data. It improves its output using backpropagation.

The main goal of the generator is to generate high-quality data that looks real and can deceive the discriminator. It achieves this by minimizing its loss function, which measures how well it fooled the discriminator into believing that the generated data is real. The generator's loss can be calculated as:

$$J_G = -\frac{1}{m}\sum_{i=1}^{m} \log D(G(z_i))$$

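Here, $J_G$ is the generator's loss, $m$ is the number of samples in the batch, $z_i$ is the $i$-th noise vector, $G(z_i)$ is the sample generated from it, and $D(G(z_i))$ is the probability the discriminator assigns to that sample being real.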
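To make the formula concrete, here is a minimal sketch in PyTorch, assuming the discriminator outputs probabilities strictly between 0 and 1. The function name `generator_loss` and the sample values are made up for illustration.

```python
import torch
import torch.nn as nn


def generator_loss(d_fake: torch.Tensor) -> torch.Tensor:
    """J_G = -(1/m) * sum_i log D(G(z_i)); d_fake holds the values D(G(z_i))."""
    return -torch.log(d_fake).mean()


# Hypothetical discriminator outputs for three generated samples
d_fake = torch.tensor([0.9, 0.5, 0.1])
loss = generator_loss(d_fake)

# The same quantity expressed as binary cross-entropy against all-ones targets
bce = nn.BCELoss()(d_fake, torch.ones_like(d_fake))
```

The loss shrinks as the discriminator's outputs for generated samples approach 1, which is why binary cross-entropy with "real" labels can stand in for $J_G$ in training code.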

Discriminator Model

The discriminator uses neural network layers suited to the type of data, such as convolutional layers for images. Its main objective is to correctly tell real data from the fake data produced by the generator. Its detection ability improves through repeated interaction with the generator.

The discriminator’s loss can be calculated as:

$$J_D = -\frac{1}{m}\sum_{i=1}^{m} \log D(x_i) - \frac{1}{m}\sum_{i=1}^{m} \log\bigl(1 - D(G(z_i))\bigr)$$

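Here, $J_D$ is the discriminator's loss, $x_i$ is the $i$-th real sample, $D(x_i)$ is the probability the discriminator assigns to that real sample being real, and $1 - D(G(z_i))$ is the probability it assigns to the $i$-th generated sample being fake.

The goal of the discriminator is to minimize this loss so that it accurately separates real data from fake data.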
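The discriminator's loss can be sketched the same way, again assuming outputs strictly between 0 and 1. The function name `discriminator_loss` and the sample values are hypothetical.

```python
import torch
import torch.nn as nn


def discriminator_loss(d_real: torch.Tensor, d_fake: torch.Tensor) -> torch.Tensor:
    """J_D = -(1/m) sum log D(x_i) - (1/m) sum log(1 - D(G(z_i)))."""
    return -torch.log(d_real).mean() - torch.log(1 - d_fake).mean()


# Hypothetical outputs: D(x_i) on real samples, D(G(z_i)) on generated ones
d_real = torch.tensor([0.9, 0.8])
d_fake = torch.tensor([0.1, 0.2])
loss = discriminator_loss(d_real, d_fake)

# Equivalent form: BCE with targets 1 for real data plus BCE with targets 0 for fakes
bce = nn.BCELoss()
bce_sum = bce(d_real, torch.ones_like(d_real)) + bce(d_fake, torch.zeros_like(d_fake))
```

Some implementations average the two terms instead of summing them; that only rescales the gradient and does not change what the discriminator is optimized for.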

MinMax Loss

The MinMax loss formula for a GAN is:

$$\min_G \max_D V(G, D) = \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

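Where $G$ is the generator and $D$ is the discriminator, $p_{data}$ is the distribution of the real data, $p_z(z)$ is the distribution of the input noise, $D(x)$ is the discriminator's estimate that a real sample $x$ is real, and $D(G(z))$ is its estimate that a generated sample is real.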
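As a hedged aside, the expectations in the MinMax objective can be estimated on a batch of $m$ real samples $x_i$ and $m$ noise vectors $z_i$, which ties the formula to the generator and discriminator losses $J_G$ and $J_D$ given earlier. The symbol $\hat{V}$ is introduced here only for illustration:

```latex
\hat{V}(G, D)
  = \frac{1}{m}\sum_{i=1}^{m}\log D(x_i)
  + \frac{1}{m}\sum_{i=1}^{m}\log\bigl(1 - D(G(z_i))\bigr)
  = -J_D
```

So maximizing $\hat{V}$ over $D$ is the same as minimizing $J_D$. For the generator, only the second term depends on $G$. Minimizing that term directly is the literal minimax choice, while $J_G = -\frac{1}{m}\sum_{i=1}^{m}\log D(G(z_i))$ is a commonly used substitute that pushes in the same direction, namely toward larger $D(G(z_i))$.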

A Generative Adversarial Network works through the following steps:

Step1: Initialization

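In the initial step, the two networks are created: the generator (G) and the discriminator (D). Their weights are initialized, typically with random values, before training starts.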

Step2: Generator’s Move

After receiving a noise vector as input, the generator converts the noise into a new data sample using its neural network. The newly generated data is then passed to the discriminator.

Step3: Discriminator’s Move

Both real data and the generator's output are provided to the discriminator as input. The discriminator calculates the probability that each sample is real and outputs a value between 0 and 1, where values near 0 indicate fake data and values near 1 indicate real data.

Step4: Learning Process

When the discriminator classifies the real and generated data correctly, it is doing its job, but neither network has reached the final goal yet. Both therefore continue to be updated through backpropagation to improve their accuracy.

Step5: Generator’s Improvement

If the discriminator mistakenly labels the generator's data as real, the generator is doing well and the discriminator is not. The generator's update reinforces what worked, so it goes on to create even more realistic data.

Step6: Discriminator’s Improvement

If the discriminator correctly identifies the generator's data as fake, the generator gains nothing from that round and the discriminator's behavior is reinforced. When the discriminator misclassifies a sample, it is penalized through a higher loss and updates itself to detect fake data more reliably.

This training process continues until the generator becomes so accurate that the discriminator can no longer tell its creations from real data. At this point the model is considered trained and ready to generate realistic data.

As we know, a Generative Adversarial Network consists of two important components: the generator, which creates new data from a noise input, and the discriminator, which decides whether the data it receives is real or fake. So, let's start implementing a GAN:

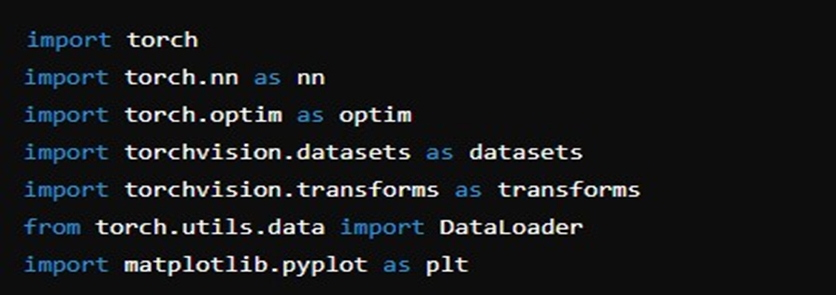

Step1: Imports and Setups

First of all, we import the libraries needed to set up the environment. We use torch and torch.nn, which are essential for building and training neural networks. torch.optim provides the optimizers that update the model weights, torchvision is useful for handling image data, and matplotlib is used for plotting.

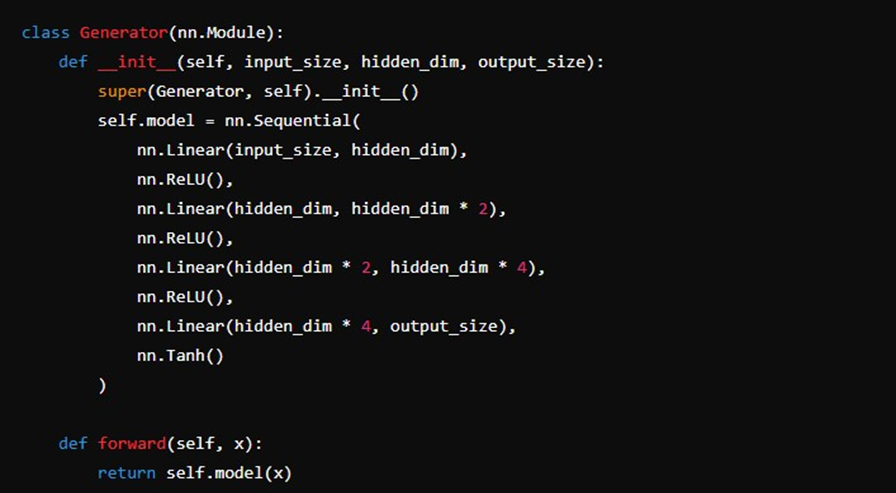

Step2: Defining the Generator

Next we define the generator, which takes noise as input and generates data from it. The generator uses ReLU activations in its hidden layers, while the output layer uses Tanh to keep the output in the range [−1, 1].
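A minimal generator matching this description might look like the sketch below. The hidden-layer sizes and the default `z_dim` of 64 are assumptions; `img_dim` of 784 corresponds to a flattened 28×28 image.

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    """Turns a noise vector of size z_dim into a flattened image of size img_dim."""

    def __init__(self, z_dim: int = 64, img_dim: int = 784):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(z_dim, 256),
            nn.ReLU(),
            nn.Linear(256, 512),
            nn.ReLU(),
            nn.Linear(512, img_dim),
            nn.Tanh(),  # output layer: squashes every pixel into [-1, 1]
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        return self.net(z)
```

Because the output lies in [−1, 1], the real images should be normalized to the same range before they reach the discriminator.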

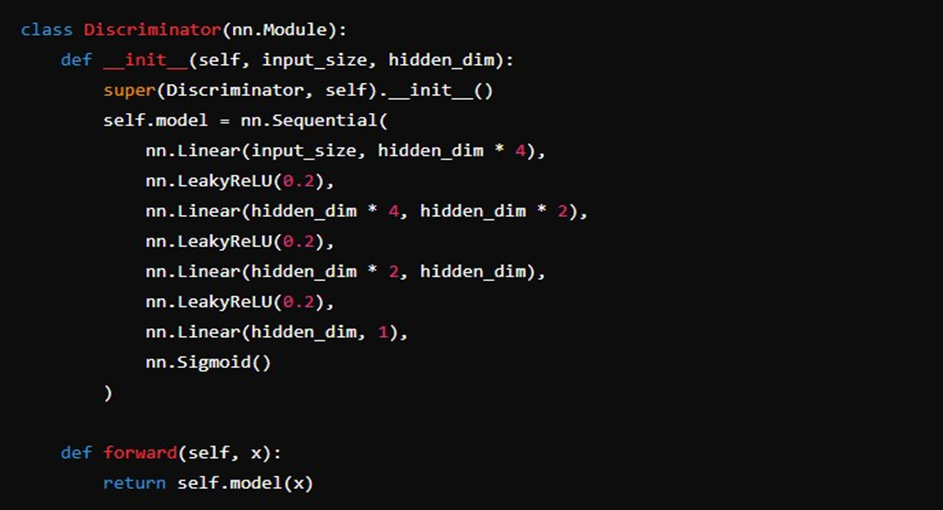

Step3: Defining the Discriminator

The discriminator takes an input (an image) and outputs the probability that it is real. It uses LeakyReLU instead of ReLU in its hidden layers, while the final layer uses a Sigmoid activation to produce a value between 0 and 1.
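A matching discriminator could be sketched as follows. The hidden-layer sizes and the LeakyReLU slope of 0.2 are assumptions chosen for illustration.

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    """Scores a flattened image with the probability that it is real."""

    def __init__(self, img_dim: int = 784):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(img_dim, 512),
            nn.LeakyReLU(0.2),
            nn.Linear(512, 256),
            nn.LeakyReLU(0.2),
            nn.Linear(256, 1),
            nn.Sigmoid(),  # final layer: a probability between 0 and 1
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)
```

The output has shape `(batch, 1)`, so training code typically flattens it with `.view(-1)` before computing the loss.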

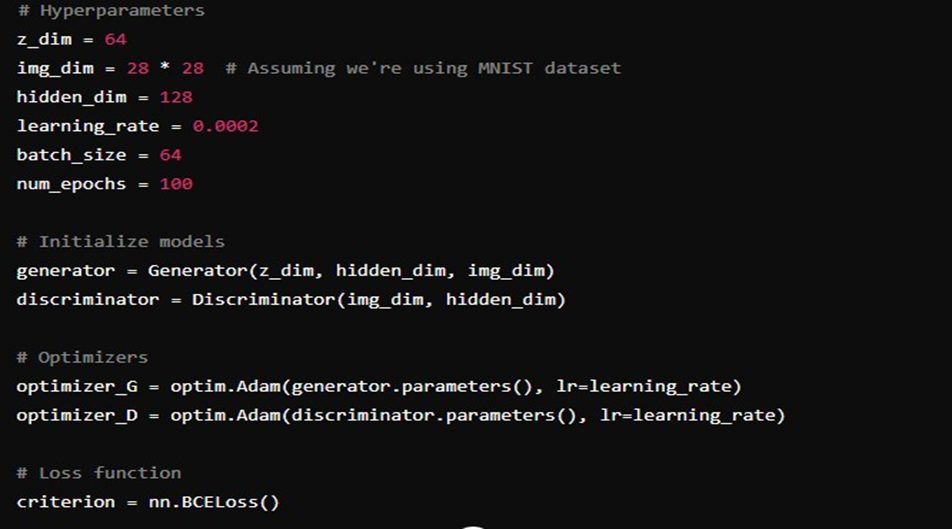

Step5: Setting Up the GAN

Now it's time to set up the GAN. This step includes defining the hyperparameters, initializing the models, and defining the optimizers.
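The setup could be sketched as below. Every hyperparameter value here is an illustrative assumption, as is the helper `setup_gan`, which is a hypothetical function that accepts any two `nn.Module` objects so that the snippet runs on its own.

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Hyperparameters (illustrative values, not taken from the article)
z_dim = 64          # size of the noise vector fed to the generator
img_dim = 28 * 28   # a flattened 28x28 image
batch_size = 32
num_epochs = 50
lr = 2e-4

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def setup_gan(generator: nn.Module, discriminator: nn.Module, lr: float = lr):
    """Move both models to the device and build the loss and the two optimizers."""
    generator.to(device)
    discriminator.to(device)
    # Binary cross-entropy pairs with a Sigmoid output on the discriminator
    criterion = nn.BCELoss()
    # One optimizer per network, so each is updated only by its own loss
    optimizer_G = optim.Adam(generator.parameters(), lr=lr, betas=(0.5, 0.999))
    optimizer_D = optim.Adam(discriminator.parameters(), lr=lr, betas=(0.5, 0.999))
    return criterion, optimizer_G, optimizer_D
```

In the tutorial's flow, the two arguments would be the generator and discriminator models from the previous steps, and the returned values would be bound to `criterion`, `optimizer_G`, and `optimizer_D`.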

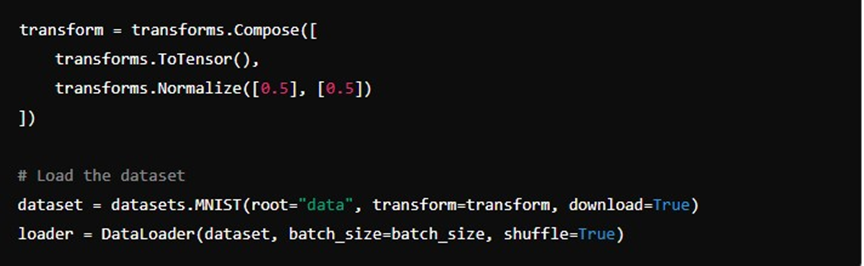

Step6: Loading the Dataset

We’ll use the MNIST dataset for training, which consists of grayscale images of handwritten digits.

Step7: Training the GAN

Let's define the loop that trains the generator and the discriminator alternately.

Code:

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    for epoch in range(num_epochs):
        for batch_idx, (real, _) in enumerate(loader):
            # Flatten each image to a vector and move it to the training device
            real = real.view(-1, img_dim).to(device)
            batch_size = real.size(0)

            # Train Discriminator: max log(D(x)) + log(1 - D(G(z)))
            noise = torch.randn(batch_size, z_dim, device=device)
            fake = generator(noise)
            disc_real = discriminator(real).view(-1)
            loss_D_real = criterion(disc_real, torch.ones_like(disc_real))
            # detach() keeps this step from backpropagating into the generator
            disc_fake = discriminator(fake.detach()).view(-1)
            loss_D_fake = criterion(disc_fake, torch.zeros_like(disc_fake))
            loss_D = (loss_D_real + loss_D_fake) / 2
            discriminator.zero_grad()
            loss_D.backward()
            optimizer_D.step()

            # Train Generator: min log(1 - D(G(z))) <-> max log(D(G(z)))
            output = discriminator(fake).view(-1)
            loss_G = criterion(output, torch.ones_like(output))
            generator.zero_grad()
            loss_G.backward()
            optimizer_G.step()

        # Report the losses of the last batch in each epoch
        print(f"Epoch [{epoch + 1}/{num_epochs}] Loss D: {loss_D.item():.4f}, Loss G: {loss_G.item():.4f}")

Step8: Generating and Displaying Images

The model has been trained, so now we can generate images from it.

Code:

    def generate_and_show_images(generator, num_images=16):
        # Sample noise on the same device as the generator
        device = next(generator.parameters()).device
        noise = torch.randn(num_images, z_dim, device=device)
        with torch.no_grad():
            fake_images = generator(noise).view(-1, 1, 28, 28).cpu()
        # normalize=True rescales the Tanh output to [0, 1] for display
        grid = torchvision.utils.make_grid(fake_images, nrow=4, normalize=True)
        plt.imshow(grid.permute(1, 2, 0))
        plt.axis("off")
        plt.show()

    generate_and_show_images(generator)

GANs are a game-changing approach in the field of Artificial Intelligence with the potential to produce high-quality data that looks real. They have had a significant impact on computer vision, the creative industries, and other real-life fields.

A Generative Adversarial Network (GAN) is a powerful class of neural network model that consists of two models competing with each other: the generator and the discriminator. The generator creates new data from noise, while the discriminator judges whether a sample is real or was produced by the generator. As a result of this competition, both models improve and the generator becomes able to create data that looks more realistic.

There are five types of Generative Adversarial Network: Vanilla GAN, Deep Convolutional GAN (DCGAN), Conditional GAN (CGAN), Laplacian Pyramid GAN (LAPGAN), and Super Resolution GAN (SRGAN).

A GAN trains the generator and the discriminator iteratively, updating them in turn. In this competition each tries to outperform the other, and as a result both become well trained and the generator is able to generate new data that appears to be real.
