Artificial Intelligence (AI) and Machine Learning (ML) have advanced at a remarkable pace. They have automated work across many industries and improved many areas of everyday life. Both fields change constantly, with each new approach aiming to improve on the results of earlier ones. Deep Learning (DL), a subfield of ML, has made decision making and many tasks easier. This steady stream of new and increasingly refined techniques is what keeps the field moving forward.

Efficiency and performance rose sharply after the emergence of Deep Learning, and classical ML techniques began to fade into the background. They are not obsolete, but their impact is smaller than it once was. Classical techniques such as support vector machines and decision trees were responsible for a wide variety of prediction tasks. A Deep Neural Network (DNN) takes an input, adjusts its own weights during training, and produces an output. To developers and consumers alike it is a black box, because it is very difficult to say on what basis a result was generated. Classical ML techniques grounded in mathematics and statistics are generally explainable, but that is not the case for DL.

Because of this behaviour, DNNs are still largely viewed as a mystery. Even the developers who design a model cannot fully explain how its results were generated. From the user's perspective, this makes the results seem ambiguous and hard to trust. Developers, for their part, cannot improve a model when they do not know where it falls short. Bias in models is also common. Research has found that a system used to predict which defendants were likely to reoffend was biased against Black defendants: it rated Black men as likely to commit another crime more often than white men. Such inaccuracy and bias undermine the integrity of a system. Another example comes from Amazon, which found that its system for screening applicants for software and other positions discriminated between male and female candidates instead of treating them equally.

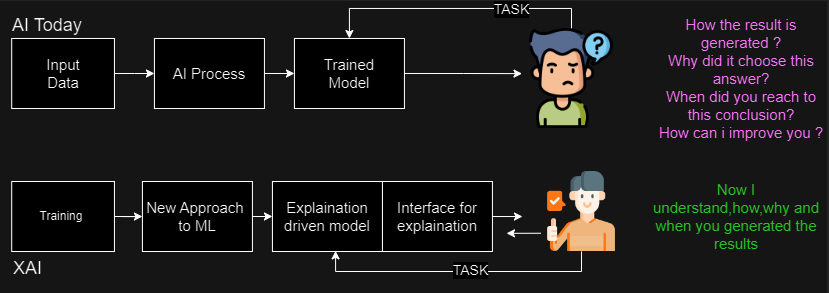

All of these issues point to the need for a way to evaluate and check the AI systems being built. The goal is to make an AI system explain itself and the results it generates. Explainable AI (XAI) is a relatively new field that helps produce explanations of those results.

XAI has become a centre of attention for AI users and researchers alike. Its aim is to build trust and help people comprehend models. The challenges are varied. One is explainability itself: how did the model generate its results? The model's behaviour, and how it carries out its tasks, is often unknown, and a model cannot by itself tell us how it arrived at a given result. We also need new and updated evaluation metrics to assess both the performance and the inner workings of a model.

History of XAI:

Looking at the history of XAI, the XAI program was introduced by DARPA (Defense Advanced Research Projects Agency) in 2017. The program focused on new techniques and methods that make a model intelligent enough to explain itself. DARPA described XAI as a system that is answerable to, and works for, humans. Such a system should be able to convey its strengths and weaknesses and give an idea of how it will behave in the future. This matters because deployed models keep learning and evolving, so it is important to understand how they are likely to behave going forward. In 2018, the European Union's General Data Protection Regulation (GDPR) also took a step towards a “right to explanation” for people affected by decisions or results generated by an algorithm.

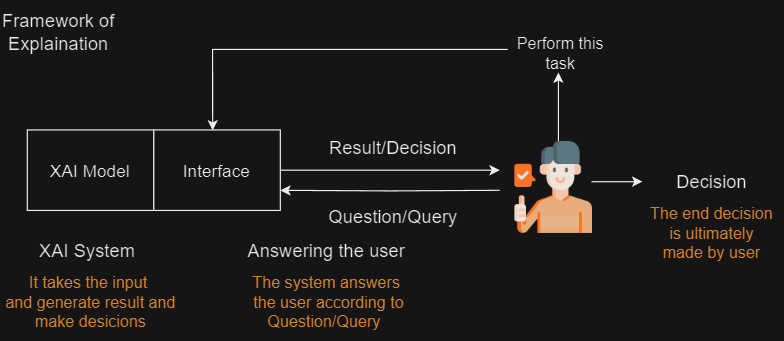

The benefit of this approach is that the model both completes its task and gives relevant explanations. It is much like a friend or colleague who understands the situation: they take in your views (input) and respond (output) accordingly. The model gives its point of view and the reasoning behind its decision, just as a human being would.

Types of XAI:

Models differ in how easily they can be explained. Some are based on mathematics and statistics, with formulas that are straightforward and can be checked directly. These are called transparent models. They include linear regression, a simple model that can be easily interpreted using a graph; Naive Bayes, which is based on Bayes' theorem; and the decision tree, which follows a yes/no approach in a tree-like structure. A graph is usually all that is needed to explain such simple, interpretable approaches.

The other type is post-hoc explanation. It applies to models that need explanation and interpretation after the fact, which includes complex and black-box models. Post-hoc explanation is further divided into model-specific and model-agnostic techniques:
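Before turning to those techniques, here is a minimal sketch of why a transparent model such as linear regression needs no separate explanation step. The toy data (hours studied versus exam score) and the helper name `fit_line` are made up for illustration; the point is only that the fitted slope and intercept are themselves the explanation.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data, not taken from any real study.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

slope, intercept = fit_line(hours, scores)
print(f"score = {slope:.1f} * hours + {intercept:.1f}")
# prints: score = 2.0 * hours + 50.0
```

Reading the output is the whole explanation: in this toy example each extra hour adds two points to the predicted score. A deep network offers no such directly readable parameters, which is why post-hoc techniques are needed.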

Model Specific:

As the name suggests, model-specific techniques are XAI techniques that can be used only with a specific type of model. They cannot be used to interpret or explain other types of models, because they depend on particular properties of the model they were designed for. Examples of model-specific explanation frameworks include CAM (Class Activation Mapping), Grad-CAM (Gradient-weighted Class Activation Mapping) and DeepLIFT (Deep Learning Important FeaTures). CAM highlights the image regions that contribute most to the result. It does this by looking at the activation maps of the last convolutional layer of a CNN (convolutional neural network). Grad-CAM, as the name suggests, builds on CAM: it uses the gradients as well as the feature maps of the last convolutional layer.
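The arithmetic behind CAM and Grad-CAM can be sketched in a few lines. This is only an illustration under stated assumptions: the feature maps, class weights and gradients below are tiny hand-made lists, whereas in practice they would be read out of a trained CNN through a deep learning framework. The function names are hypothetical.

```python
def weighted_map(feature_maps, weights):
    """Sum the feature maps position by position, each scaled by its weight."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    heat = [[0.0] * width for _ in range(height)]
    for fmap, w in zip(feature_maps, weights):
        for i in range(height):
            for j in range(width):
                heat[i][j] += w * fmap[i][j]
    return heat


def cam(feature_maps, class_weights):
    """CAM: weight each last-layer feature map by the class's classifier weight."""
    return weighted_map(feature_maps, class_weights)


def grad_cam(feature_maps, gradients):
    """Grad-CAM: weight each map by its spatially averaged gradient, then ReLU."""
    weights = [sum(sum(row) for row in g) / (len(g) * len(g[0])) for g in gradients]
    heat = weighted_map(feature_maps, weights)
    return [[max(0.0, v) for v in row] for row in heat]


# Assumed toy values: two 2x2 feature maps from the "last convolutional layer".
maps = [[[1.0, 0.0], [0.0, 0.0]],
        [[0.0, 0.0], [0.0, 2.0]]]
class_weights = [0.5, 1.5]                  # hypothetical classifier weights
grads = [[[-1.0, -1.0], [-1.0, -1.0]],      # hypothetical d(score)/d(map 1)
         [[0.5, 1.5], [1.0, 1.0]]]          # hypothetical d(score)/d(map 2)

print(cam(maps, class_weights))   # [[0.5, 0.0], [0.0, 3.0]]
print(grad_cam(maps, grads))      # [[0.0, 0.0], [0.0, 2.0]]
```

In both outputs the bottom-right cell is the hottest, meaning that region of the toy image contributed most to the class score. The only difference between the two methods here is where the per-map weights come from: the classifier layer for CAM, averaged gradients for Grad-CAM.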

Model Agnostic:

Model-agnostic techniques are those that can be used with any model. They obtain their information from the model's predictions, so they do not depend on a particular model type. They provide insight into the decisions made by an AI system without requiring the user to access its internal workings. This makes them flexible and applicable to a wide range of machine learning and deep learning models, and it helps promote transparency, accountability and trust in AI systems. They include explanations specific to a single data point as well as explanations for the whole dataset. The two types are:

The first type is local explanation. A local explanation relates to a particular instance; "local" here means a small region of the data or a single instance. It includes LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations). LIME takes a set of instances in the neighbourhood of a specific prediction. That surrounding data is then used to train a simple, interpretable model, such as a linear regression, which approximates the behaviour of the original model in that neighbourhood. SHAP is based on cooperative game theory, in which each player's reward reflects their contribution: the greater the contribution, the bigger the reward. In the DL setting, the players are the input features, and each one's contribution to the result is measured. The larger a feature's SHAP value in magnitude, the bigger its contribution to the result.
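The LIME idea described above can be sketched without any library. The following is a simplified illustration, not the actual `lime` package: `black_box` is a made-up stand-in for an opaque model, and the sampling scale, kernel width and function names are assumptions chosen for the example. It samples neighbours around one instance, weights them by proximity, and fits a weighted linear model whose coefficients serve as the local explanation.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model; only its predictions are used."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def lime_style_explanation(predict, instance, n_samples=500,
                           scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in range(d)]
        neighbour = [v + o for v, o in zip(instance, offset)]
        # Closer neighbours get a larger weight (exponential kernel).
        weight = math.exp(-sum(o * o for o in offset) / kernel_width ** 2)
        row = [1.0] + offset  # intercept term plus offsets from the instance
        y = predict(neighbour)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]


intercept, coefs = lime_style_explanation(black_box, [1.0, 2.0])
print([round(c, 2) for c in coefs])
```

Around the instance `[1.0, 2.0]` the coefficients should come out close to 2 and 3, which are the local slopes of the stand-in model at that point. The surrogate says nothing about how the model behaves far from this instance, which is exactly what "local" means here.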

The other type of model-agnostic technique is global explanation, which explains the model as a whole and why it makes the decisions it does. Researchers have tried to use local techniques to generate global explanations. The main advantage of this approach is that it builds on local results to derive a global view. SP-LIME, as the name suggests, is a variant of LIME that produces a global explanation from local information. It uses an explanation matrix that holds the local LIME values and from which the global result is derived. SHAP for trees is another approach built on SHAP values. It uses a tree explainer framework, and the SHAP values are designed in such a way that global results can be generated from them. Another technique is GAM (Global Attribution Mapping), introduced in 2019. Each global attribution explains a specific part of the model, and together they yield the global explanation of the model.
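One common way to turn local attributions into a global picture is to average their absolute values over many instances. The sketch below illustrates that idea with exact Shapley values on a made-up three-feature model. It is not SP-LIME, the tree explainer or GAM; in particular, "removing" a feature by replacing it with a fixed baseline value is a simplifying assumption, and `model`, `shapley_values` and `global_importance` are hypothetical names.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Made-up model: one additive feature and one interaction."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(instance)

    def value(subset):
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def global_importance(predict, dataset, baseline):
    """Global view: mean absolute Shapley value of each feature."""
    totals = [0.0] * len(baseline)
    for instance in dataset:
        for i, phi in enumerate(shapley_values(predict, instance, baseline)):
            totals[i] += abs(phi)
    return [t / len(dataset) for t in totals]


baseline = [0.0, 0.0, 0.0]
local = shapley_values(model, [1.0, 2.0, 3.0], baseline)
print([round(v, 3) for v in local])        # [2.0, 3.0, 3.0]

data = [[1.0, 2.0, 3.0], [-1.0, 1.0, 1.0], [2.0, 0.0, 5.0]]
overall = global_importance(model, data, baseline)
print([round(v, 3) for v in overall])      # [2.667, 1.167, 1.167]
```

The local values sum to the difference between the prediction (8) and the baseline prediction (0), and the interaction between the second and third features is split evenly between them. Exact enumeration grows exponentially with the number of features, so practical tools rely on approximations or model-specific shortcuts.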

In conclusion, XAI has given models a way to express themselves. It has made models and AI more intelligent, since they can now explain their own results, much as people do when they hold a point of view. This blog explored its types, based on model specificity and on local versus global scope. We can pick whichever suits our needs and use it to make a model answerable and explainable.
